MASTER'S CAPSTONE

Designing and developing Cloak, a "privacy spellchecker" for chatbot conversations

TEAM

1 product designer

2 product managers

2 software engineers

TIMELINE

Spring 2025, 15 weeks

ROLE

Product Designer

SUMMARY

Meet Cloak, a digital privacy tool built for the age of AI.

This capstone project was the culmination of my graduate studies at UC Berkeley. As the product designer, I collaborated with two product managers and two software engineers.

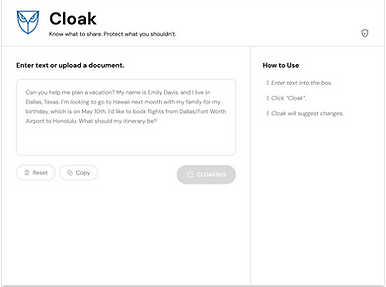

In one semester, our team designed, developed, and launched Cloak, a browser-based privacy tool that helps users protect personal information while interacting with chatbots.

CONTEXT

As the use of AI tools becomes more widespread, concerns about data privacy are only growing.

58% of U.S. adults under 30 have used ChatGPT (Pew Research)

63% of consumers believe GenAI might expose their data through security breaches or misuse (IAPP)

DISCOVERY RESEARCH

We set out to explore how people think and feel about AI and privacy. Our research revealed three unique mindsets.

KEY TAKEAWAYS: MINDSETS

01. UNAWARE AND UNCONCERNED

These users don't see privacy as a pressing issue and rarely take protective action.

02. AWARE AND CONCERNED, BUT OVERWHELMED

These users want to stay safe, but feel overwhelmed by complex settings and legal jargon. They tend to take action reactively, only after a violation has occurred.

03. AWARE, CONCERNED, PREPARED

These users are highly engaged with digital privacy. They seek out privacy-first tools and have considered how AI introduces new privacy risks.

We chose to focus on the second and third groups because they already care about privacy. What they want is clearer guidance and more accessible tools to take action.

RESEARCH METHODS

EXPERT INTERVIEWS

To better understand the field, we first interviewed professionals with diverse backgrounds in privacy and cybersecurity.

Privacy and data policy researcher @ Stanford

Research director of privacy-focused group

VP of engineering for cybersecurity product

Senior technologist for privacy nonprofit

Product designer for privacy software

Founder and CEO of privacy consulting firm

"DIGITAL CITIZEN" INTERVIEWS

We also interviewed "digital citizens," meaning regular technology users with varying degrees of concern for digital privacy.

MARKET ANALYSIS

To understand gaps in the personal privacy market, we created a competitive analysis of existing privacy tools.

LITERATURE REVIEW

Conducting a literature review of privacy and cybersecurity research helped ground the project in foundational privacy theory.

JOURNEY MAPPING

Based on our interview insights, we tracked the typical journey of someone using an AI chatbot.

Phases: RECOGNIZE → DECIDE → ENGAGE → COMMIT → REFLECT

Actions

- Struggles to polish a work email
- Knows the email contains sensitive company data
- Thinks about using an AI tool for help
- Chooses ChatGPT based on reputation and convenience
- Opens ChatGPT
- Pastes email draft into ChatGPT
- Adds a prompt: “Can you make this more professional?”
- Wonders if it's safe to share with AI tools
- Reviews and edits ChatGPT's response
- Sees improvements and feels reassured
- Sends email
- Closes ChatGPT
- Feels lingering doubt about whether it was safe to use

Thoughts & Feelings

- Hesitant: “I really need this email to sound right.”
- Hopeful: “I’ll just drop it into ChatGPT. Everyone uses it.”
- Uneasy: “Should I take out my coworkers’ names? Oh well, I'm in a rush.”
- Relieved: “Okay, this sounds way better.”
- Regretful: “I probably shouldn't have shared that. What if it's stored somewhere?”

THE PROBLEM SPACE

The problem?

It's too easy to overshare with AI and regret it later.

OUR OBJECTIVE

How might we make privacy feel effortless and proactive during AI-assisted tasks?

OUR IDEA

Cloak: a tool that redacts personal information from AI prompts automatically and intelligently.

IDEATION PROCESS

With our guiding questions in mind, I led ideation.

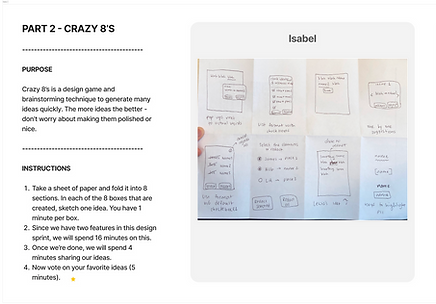

DESIGN WORKSHOP

First, I planned a design workshop to introduce my team to design thinking principles and collaborative exercises.

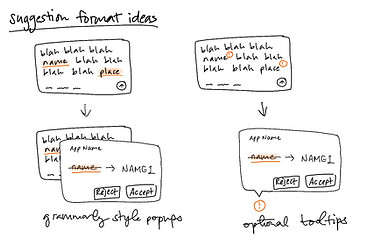

SKETCHING

After the workshop, I sketched some potential features to help the team visualize and explore different ideas.

JOURNEY MAPPING (PART 2)

What does a safer AI workflow look like with Cloak?

Translating our ideas into a revised user journey showed where and how our product might fit into someone's workflow.

Phases: RECOGNIZE → DECIDE → ENGAGE → COMMIT → REFLECT

Actions

- Struggles to polish a work email
- Knows the email contains sensitive company data
- Thinks about using an AI tool for help
- Chooses ChatGPT based on reputation and convenience
- Opens ChatGPT
- Opens Cloak
- Pastes email draft into Cloak
- Cloak redacts personal data
- Pastes redacted email into ChatGPT
- Adds a prompt: “Can you make this more professional?”
- Reviews and edits ChatGPT's response
- Sees improvements and feels reassured
- Uses Cloak to reveal (or Uncloak) redacted terms
- Sends email
- Closes ChatGPT
- Feels confident that personal data was not shared with ChatGPT

Thoughts & Feelings

- Hesitant: “I really need this email to sound right.”
- Hopeful: “I’ll use Cloak to clean it up, then send it to ChatGPT.”
- Empowered: “Nice, Cloak redacted my coworkers' names for me.”
- Relieved: “Okay, this sounds way better.”
- Relaxed: “That was helpful, and I only shared what I needed to.”

DESIGN CONSIDERATIONS

While building Cloak, we worked through a few critical issues that could impact user trust and experience.

01. HOW DO WE REDACT WORDS WHILE PRESERVING THE MEANING OF USER PROMPTS?

1. User sends their prompt to Cloak

2. Cloak sends prompt to local server

3. Server gets redacted words from local LLM

4. Server sends redacted words back to Cloak

Cloak uses a local large language model to analyze each prompt and redact only what’s unnecessary, preserving the prompt's meaning and intent. The LLM runs entirely on the user's device, so no data is sent to external servers or AI companies.
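To make the four-step flow above more concrete, here is a minimal Python sketch of the cloaking step. It is an illustration under stated assumptions, not Cloak's actual code: the function names (`detect_sensitive_terms`, `cloak`), the `[CATEGORY_N]` placeholder format, and the regex patterns are all hypothetical. In particular, the regex detector is only a stand-in for the local LLM call, which in the real product decides what to redact.

```python
import re
from typing import Callable

Detector = Callable[[str], list[tuple[str, str]]]


def detect_sensitive_terms(prompt: str) -> list[tuple[str, str]]:
    """Hypothetical stand-in for the local LLM (steps 2 to 4).

    Returns (term, category) pairs. A real build would ask a model running
    on the user's device; these simple regexes are for illustration only.
    """
    patterns = {
        "EMAIL": r"[\w.+-]+@[\w-]+\.[\w.-]+",
        "PHONE": r"\b\d{3}[-.]\d{3}[-.]\d{4}\b",
    }
    found = []
    for category, pattern in patterns.items():
        for match in re.finditer(pattern, prompt):
            found.append((match.group(), category))
    return found


def cloak(prompt: str, detector: Detector = detect_sensitive_terms) -> tuple[str, dict[str, str]]:
    """Replace each detected term with a placeholder such as [EMAIL_1].

    Returns the redacted prompt plus a placeholder-to-original mapping.
    The mapping never leaves this function's caller, so it can stay on
    the user's device.
    """
    mapping: dict[str, str] = {}
    counters: dict[str, int] = {}
    redacted = prompt
    for term, category in detector(prompt):
        if term in mapping.values():
            continue  # this term already has a placeholder
        counters[category] = counters.get(category, 0) + 1
        placeholder = f"[{category}_{counters[category]}]"
        mapping[placeholder] = term
        redacted = redacted.replace(term, placeholder)
    return redacted, mapping


if __name__ == "__main__":
    text = "Ask jane@example.com or call 555-123-4567 about the budget."
    redacted, mapping = cloak(text)
    print(redacted)
    print(mapping)
```

Passing the detector in as a parameter keeps the sketch independent of any particular model: a function that queries a local LLM could be swapped in without changing `cloak`.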

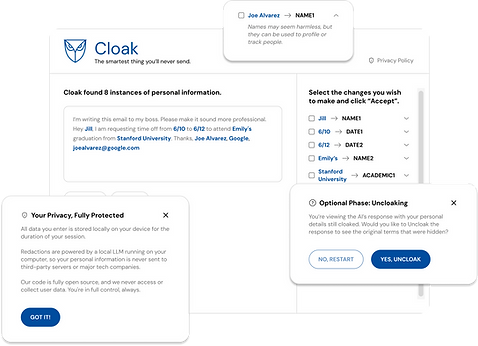

02. HOW DO WE MAKE THE REDACTION PROCESS FEEL VISIBLE AND TRUSTWORTHY?

For every redaction, Cloak shows what type of personal information was removed and why. This gives users clear proof that their data is being protected and that the system is working as expected.

03. HOW DO WE KEEP REDACTION FROM SLOWING DOWN EVERYDAY WORKFLOWS?

We didn't want temporarily hiding personal data to create extra steps later. The Uncloaking feature lets users quickly restore redacted terms after getting a response from their AI tool, so tasks like editing emails or resumes aren’t disrupted.
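One plausible way to picture Uncloaking is as a simple lookup: if the tool keeps a local record of which placeholder stood in for which original term, restoring the text is a matter of substituting them back. The sketch below assumes a hypothetical `[CATEGORY_N]` placeholder format and a hypothetical `uncloak` function; the source does not describe how Cloak stores or restores redacted terms.

```python
def uncloak(text: str, mapping: dict[str, str]) -> str:
    """Restore original terms in a chatbot response.

    `mapping` is assumed to map placeholders (e.g. "[NAME_1]") to the
    original terms and to be stored only on the user's device.
    """
    restored = text
    for placeholder, original in mapping.items():
        # The closing bracket is part of the placeholder, so "[NAME_1]"
        # cannot accidentally match inside "[NAME_10]".
        restored = restored.replace(placeholder, original)
    return restored


if __name__ == "__main__":
    # Hypothetical mapping and chatbot response, for illustration only.
    mapping = {"[NAME_1]": "Priya", "[EMAIL_1]": "priya@example.com"}
    response = "Hi [NAME_1], I have sent the report to [EMAIL_1]."
    print(uncloak(response, mapping))
```

Because the chatbot only ever sees the placeholders, any placeholder it keeps in its reply can be swapped back locally; placeholders it drops or rewrites would simply go unrestored.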

04. HOW DO WE ENSURE PRIVACY IS BUILT INTO EVERY FACET OF OUR PRODUCT?

To align with privacy-by-design principles, we built Cloak so all user data stays local. The redaction process runs entirely on a local LLM, with no information collected or stored. The project is also fully open source.

USABILITY TESTING & ITERATIONS

Then, it was time to test our product with real users.

While our engineers finalized the back end, I led usability tests to evaluate how well Cloak communicated its purpose, how easy it was to use, and how it impacted user confidence. Feedback was positive overall, but a few usability issues surfaced.

01. USERS FOUND THE CLOAKING PROCESS SLOW, CAUSING CONFUSION

One user blamed himself when the system made a redaction mistake, highlighting the need for clearer system feedback. To manage expectations around speed and accuracy, I introduced a loading screen with helper text reassuring users that the system is working.

Previous Version: Loading Spinner

New Version: Loading Screen

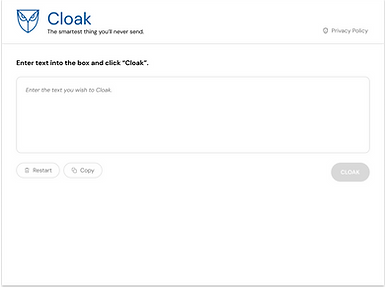

02. USERS OVERLOOKED INSTRUCTIONS IN THE ORIGINAL TWO-COLUMN LAYOUT

To improve visibility and guidance, I switched to a single-column layout and placed instructions directly above key actions. This made the guidance more noticeable, freed up screen real estate for user input, and reduced visual clutter.

Previous Version: Two-Column Layout

New Version: One-Column Layout

PRODUCT DEMO

See the final version of Cloak in action!

IMPACT

When using Cloak, people estimated sharing 56% less personal data with chatbots.

While we couldn't measure the actual amount of personal data collected by chatbots, we still wanted to assess Cloak's perceived value. We asked users to estimate how much personal data they share with chatbots, both with and without Cloak.

WHAT ONE USER HAD TO SAY

"A feature like this is important or even necessary... Generative AI platforms [feel] like a black box. You're like, where is this data going? Cloak brings peace of mind for sure."

REFLECTION

Here's what I learned and what I'd explore next.

This was my first project working as the sole designer within a cross-functional team. Taking full ownership of the design process from concept through launch challenged me to grow in new ways. I see several opportunities to expand on our work.

Reaching people who don't care (yet)

Currently, our product speaks to users who value privacy but aren't sure how to protect it. Next, I’d explore how to market Cloak to people who don’t yet see privacy as a problem.

Expanding Cloak's capabilities

Although it didn't fit into the scope of our MVP, we developed a method for redacting personal data from PDFs. Implementing this feature would expand Cloak's utility beyond text-based inputs.